SBC Performance Comparison (LattePanda 3 Delta, Jetson AGX Xavier)

The LattePanda 3 Delta and the Jetson AGX Xavier have both arrived, so I'm going to benchmark them.

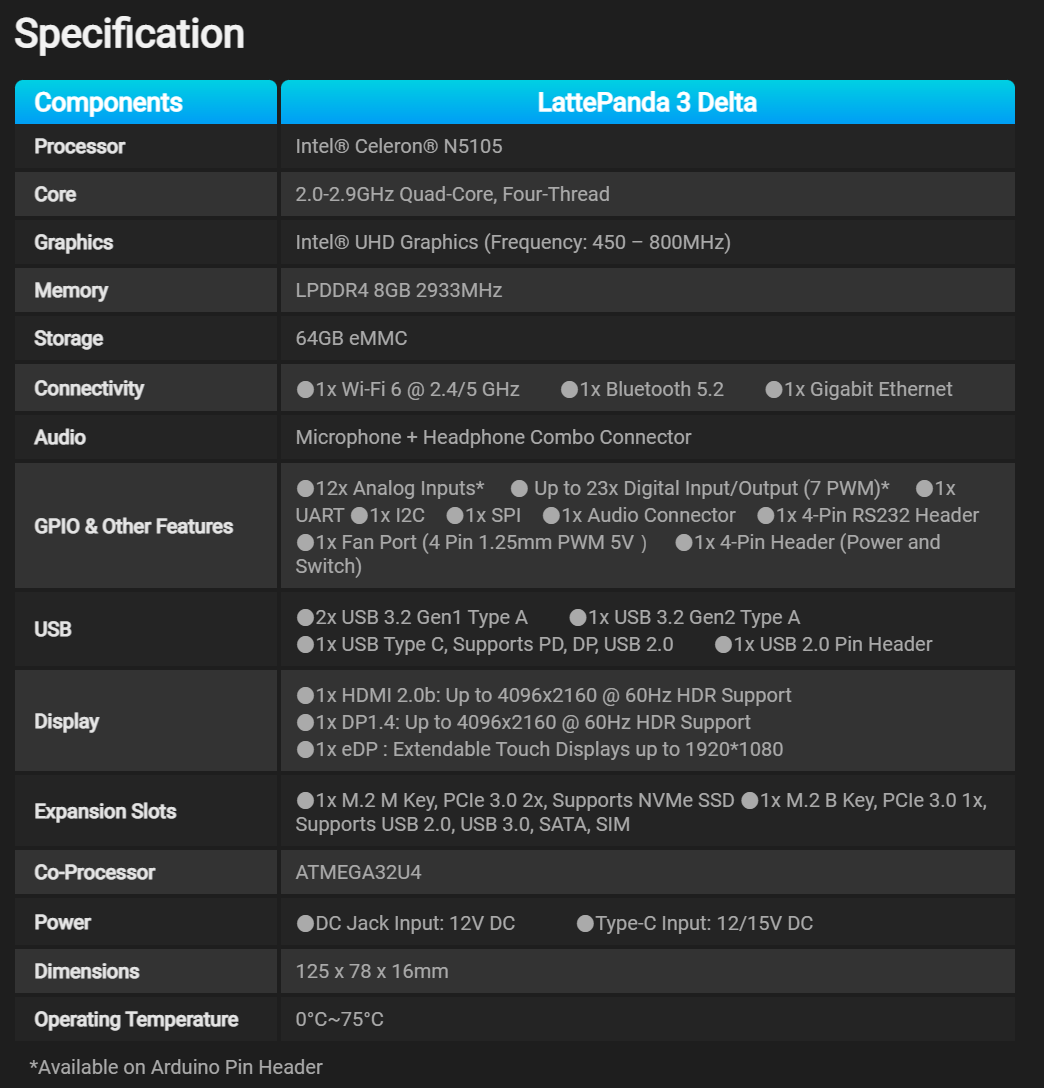

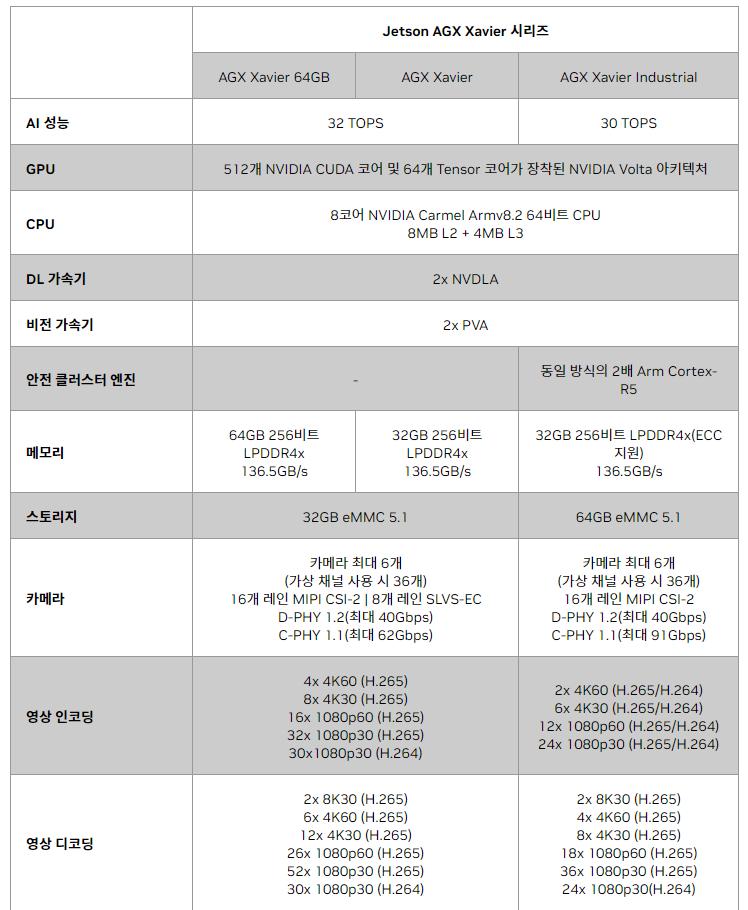

First, a comparison of the officially listed specs.

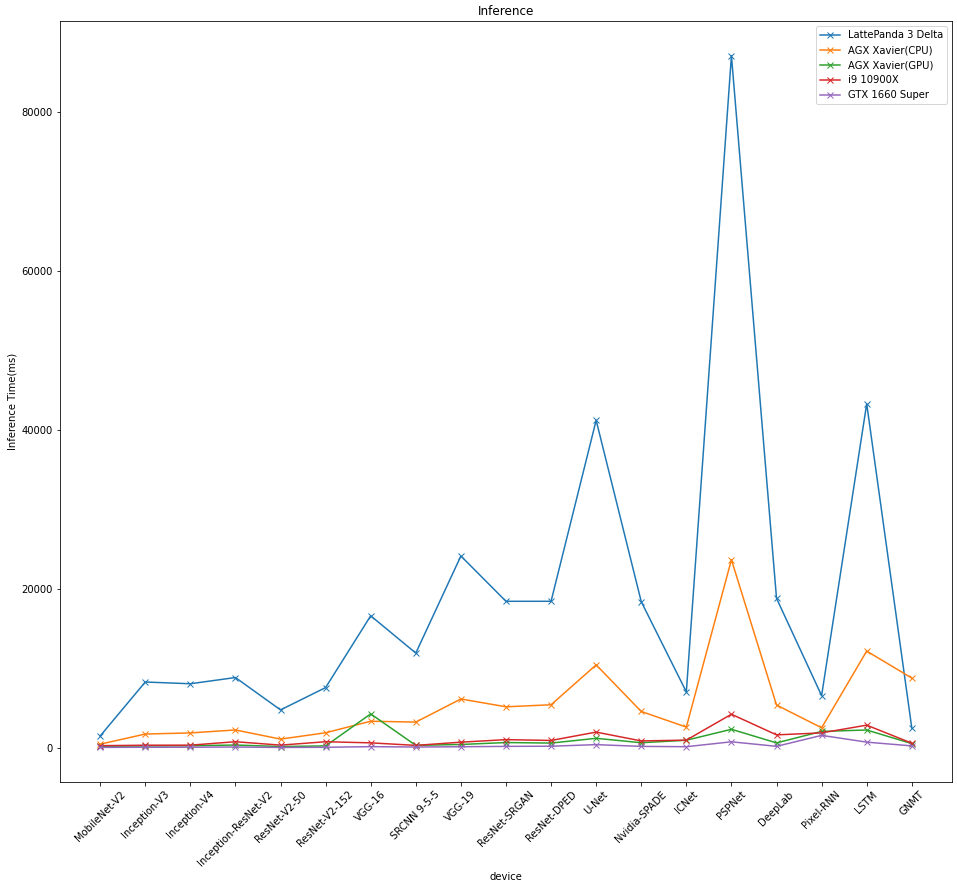

For the benchmark tool, I used ai-benchmark from PyPI.

- MobileNet-V2 [classification]
- Inception-V3 [classification]
- Inception-V4 [classification]
- Inception-ResNet-V2 [classification]
- ResNet-V2-50 [classification]
- ResNet-V2-152 [classification]
- VGG-16 [classification]
- SRCNN 9-5-5 [image-to-image mapping]
- VGG-19 [image-to-image mapping]
- ResNet-SRGAN [image-to-image mapping]
- ResNet-DPED [image-to-image mapping]
- U-Net [image-to-image mapping]
- Nvidia-SPADE [image-to-image mapping]
- ICNet [image segmentation]
- PSPNet [image segmentation]
- DeepLab [image segmentation]
- Pixel-RNN [inpainting]
- LSTM [sentence sentiment analysis]
- GNMT [text translation]

It can run performance tests on representative models like these.
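For reference, a run of ai-benchmark can be sketched roughly as below. This is a minimal sketch, not the exact script used for this post: the wrapper name `run_ai_benchmark` is made up for illustration, and I am assuming the CPU and GPU results were produced by toggling the package's `use_CPU` option.

```python
def run_ai_benchmark(use_cpu=False):
    """Run the full ai-benchmark suite (inference + training) and return its results.

    `run_ai_benchmark` is a hypothetical helper name used only in this sketch.
    """
    # Imported lazily so the module loads even where ai-benchmark or
    # TensorFlow is not installed (pip install ai-benchmark).
    from ai_benchmark import AIBenchmark

    # use_CPU=True forces CPU execution; None lets the tool pick a GPU
    # when TensorFlow can see one.
    benchmark = AIBenchmark(use_CPU=True if use_cpu else None)
    return benchmark.run()


# Example usage (takes a long time on an SBC):
# results = run_ai_benchmark(use_cpu=True)   # CPU-only run
# results = run_ai_benchmark(use_cpu=False)  # GPU run, if available
```

The package also offers `run_inference()` and `run_training()` if only one half of the suite is needed.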

Test environment

Jetson AGX Xavier

- OS: Jetson Linux, JetPack 5.0.2
- Tested inside the l4t-tensorflow Docker container

LattePanda 3 Delta

- OS: Ubuntu 22.04
- Tested inside the tensorflow Docker container

Work PC

- OS: Windows 11 22H2, WSL2 Ubuntu 22.04
- CPU: i9-10900X
- GPU: NVIDIA GTX 1660 Super
- Tested inside the tensorflow Docker container
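Since every test runs inside a container, it is worth checking whether TensorFlow can see the GPU there before starting a long benchmark. The following is a small sketch under that assumption; the helper name `visible_gpus` is hypothetical and this check was not necessarily part of the original test procedure.

```python
def visible_gpus():
    """Return the names of the GPUs TensorFlow can see in this environment."""
    # Lazy import: TensorFlow is only needed when the function is called.
    import tensorflow as tf

    return [device.name for device in tf.config.list_physical_devices("GPU")]


# Example usage inside the container:
# print(visible_gpus())  # an empty list means the benchmark would fall back to the CPU
```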

MobileNet-V2(224x224, 50 batch)

Inception-V3(346x346, 20 batch)

Inception-V4(346x346, 10 batch)

Inception-ResNet-V2(346x346, 10 batch)

ResNet-V2-50(346x346, 10 batch)

ResNet-V2-152(256x256, 10 batch)

VGG-16(224x224, 20 batch)

SRCNN 9-5-5(512x512, 10 batch)

VGG-19 Super-Res(256x256, 10 batch)

ResNet-SRGAN(512x512, 10 batch)

ResNet-DPED(256x256, 10 batch)

U-Net(512x512, 4 batch)

Nvidia-SPADE(128x128, 5 batch)

ICNet(1024x1536, 5 batch)

PSPNet(720x720, 5 batch)

DeepLab(512x512, 2 batch)

Pixel-RNN(64x64, 50 batch)

LSTM-Sentiment(1024x300, 100 batch)

GNMT-Translation(1x20, 1 batch)

The exact benchmark results are attached below.

Xavier CPU

1/19. MobileNet-V2

1.1 - inference | batch=50, size=224x224: 475 ± 20 ms

1.2 - training | batch=50, size=224x224: 2774 ± 99 ms

2/19. Inception-V3

2.1 - inference | batch=20, size=346x346: 1751 ± 30 ms

2.2 - training | batch=20, size=346x346: 6794 ± 302 ms

3/19. Inception-V4

3.1 - inference | batch=10, size=346x346: 1893 ± 35 ms

3.2 - training | batch=10, size=346x346: 7220 ± 210 ms

4/19. Inception-ResNet-V2

4.1 - inference | batch=10, size=346x346: 2264 ± 31 ms

4.2 - training | batch=8, size=346x346: 7020 ± 206 ms

5/19. ResNet-V2-50

5.1 - inference | batch=10, size=346x346: 1114 ± 10 ms

5.2 - training | batch=10, size=346x346: 4371 ± 23 ms

6/19. ResNet-V2-152

6.1 - inference | batch=10, size=256x256: 1910 ± 111 ms

6.2 - training | batch=10, size=256x256: 7974 ± 80 ms

7/19. VGG-16

7.1 - inference | batch=20, size=224x224: 3362 ± 31 ms

7.2 - training | batch=2, size=224x224: 5468 ± 65 ms

8/19. SRCNN 9-5-5

8.1 - inference | batch=10, size=512x512: 3251 ± 34 ms

8.2 - inference | batch=1, size=1536x1536: 2892 ± 48 ms

8.3 - training | batch=10, size=512x512: 19476 ± 468 ms

9/19. VGG-19 Super-Res

9.1 - inference | batch=10, size=256x256: 6159 ± 24 ms

9.2 - inference | batch=1, size=1024x1024: 9866 ± 150 ms

9.3 - training | batch=10, size=224x224: 23365 ± 207 ms

10/19. ResNet-SRGAN

10.1 - inference | batch=10, size=512x512: 5175 ± 59 ms

10.2 - inference | batch=1, size=1536x1536: 4605 ± 27 ms

10.3 - training | batch=5, size=512x512: 10238 ± 347 ms

11/19. ResNet-DPED

11.1 - inference | batch=10, size=256x256: 5430 ± 40 ms

11.2 - inference | batch=1, size=1024x1024: 8530 ± 39 ms

11.3 - training | batch=15, size=128x128: 8706 ± 64 ms

12/19. U-Net

12.1 - inference | batch=4, size=512x512: 10448 ± 36 ms

12.2 - inference | batch=1, size=1024x1024: 11629 ± 611 ms

12.3 - training | batch=4, size=256x256: 11040 ± 527 ms

13/19. Nvidia-SPADE

13.1 - inference | batch=5, size=128x128: 4587 ± 268 ms

13.2 - training | batch=1, size=128x128: 3933 ± 73 ms

14/19. ICNet

14.1 - inference | batch=5, size=1024x1536: 2624 ± 34 ms

14.2 - training | batch=10, size=1024x1536: 6753 ± 170 ms

15/19. PSPNet

15.1 - inference | batch=5, size=720x720: 23664 ± 690 ms

15.2 - training | batch=1, size=512x512: 8592 ± 454 ms

16/19. DeepLab

16.1 - inference | batch=2, size=512x512: 5396 ± 27 ms

16.2 - training | batch=1, size=384x384: 6101 ± 47 ms

17/19. Pixel-RNN

17.1 - inference | batch=50, size=64x64: 2538 ± 162 ms

17.2 - training | batch=10, size=64x64: 2114 ± 22 ms

18/19. LSTM-Sentiment

18.1 - inference | batch=100, size=1024x300: 12179 ± 52 ms

18.2 - training | batch=10, size=1024x300: 30321 ± 2331 ms

19/19. GNMT-Translation

19.1 - inference | batch=1, size=1x20: 8786 ± 149 ms

Device Inference Score: 336

Device Training Score: 390

Device AI Score: 726

Xavier GPU

1/19. MobileNet-V2

1.1 - inference | batch=50, size=224x224: 209 ± 24 ms

1.2 - training | batch=50, size=224x224: 557 ± 13 ms

2/19. Inception-V3

2.1 - inference | batch=20, size=346x346: 253 ± 2 ms

2.2 - training | batch=20, size=346x346: 947 ± 6 ms

3/19. Inception-V4

3.1 - inference | batch=10, size=346x346: 282 ± 2 ms

3.2 - training | batch=10, size=346x346: 1057 ± 3 ms

4/19. Inception-ResNet-V2

4.1 - inference | batch=10, size=346x346: 370.8 ± 0.9 ms

4.2 - training | batch=8, size=346x346: 1029 ± 2 ms

5/19. ResNet-V2-50

5.1 - inference | batch=10, size=346x346: 181.4 ± 0.9 ms

5.2 - training | batch=10, size=346x346: 597 ± 2 ms

6/19. ResNet-V2-152

6.1 - inference | batch=10, size=256x256: 260 ± 7 ms

6.2 - training | batch=10, size=256x256: 872 ± 2 ms

7/19. VGG-16

7.1 - inference | batch=20, size=224x224: 4282 ± 18 ms

7.2 - training | batch=2, size=224x224: 11399 ± 26 ms

8/19. SRCNN 9-5-5

8.1 - inference | batch=10, size=512x512: 327 ± 22 ms

8.2 - inference | batch=1, size=1536x1536: 404 ± 1 ms

8.3 - training | batch=10, size=512x512: 1079 ± 22 ms

9/19. VGG-19 Super-Res

9.1 - inference | batch=10, size=256x256: 439 ± 3 ms

9.2 - inference | batch=1, size=1024x1024: 910.3 ± 0.5 ms

9.3 - training | batch=10, size=224x224: 1139 ± 5 ms

10/19. ResNet-SRGAN

10.1 - inference | batch=10, size=512x512: 682 ± 25 ms

10.2 - inference | batch=1, size=1536x1536: 459 ± 2 ms

10.3 - training | batch=5, size=512x512: 721 ± 3 ms

11/19. ResNet-DPED

11.1 - inference | batch=10, size=256x256: 615 ± 2 ms

11.2 - inference | batch=1, size=1024x1024: 1314 ± 3 ms

11.3 - training | batch=15, size=128x128: 809 ± 2 ms

12/19. U-Net

12.1 - inference | batch=4, size=512x512: 1213 ± 4 ms

12.2 - inference | batch=1, size=1024x1024: 1240 ± 4 ms

12.3 - training | batch=4, size=256x256: 1061 ± 3 ms

13/19. Nvidia-SPADE

13.1 - inference | batch=5, size=128x128: 660 ± 2 ms

13.2 - training | batch=1, size=128x128: 850 ± 2 ms

14/19. ICNet

14.1 - inference | batch=5, size=1024x1536: 970 ± 75 ms

14.2 - training | batch=10, size=1024x1536: 3848 ± 138 ms

15/19. PSPNet

15.1 - inference | batch=5, size=720x720: 2350 ± 4 ms

15.2 - training | batch=1, size=512x512: 817 ± 3 ms

16/19. DeepLab

16.1 - inference | batch=2, size=512x512: 637 ± 2 ms

16.2 - training | batch=1, size=384x384: 663 ± 2 ms

17/19. Pixel-RNN

17.1 - inference | batch=50, size=64x64: 2080 ± 15 ms

17.2 - training | batch=10, size=64x64: 6810 ± 67 ms

18/19. LSTM-Sentiment

18.1 - inference | batch=100, size=1024x300: 2260 ± 498 ms

18.2 - training | batch=10, size=1024x300: 2710 ± 11 ms

19/19. GNMT-Translation

19.1 - inference | batch=1, size=1x20: 546 ± 13 ms

Device Inference Score: 2116

Device Training Score: 2322

Device AI Score: 4438
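To compare the two logs above without reading them line by line, the result lines can be parsed and turned into CPU-to-GPU speed ratios. The sketch below is only an illustration: the regular expression assumes the line format shown in the logs above, the names `parse_log` and `speedups` are made up, and only three lines from each log are included as sample input.

```python
import re

# One result line looks like:
# "<test id> - <mode> | batch=<n>, size=<WxH>: <mean> ± <std> ms"
LINE_RE = re.compile(
    r"(?P<test>\d+\.\d+) - (?P<mode>inference|training) \| "
    r"batch=(?P<batch>\d+), size=(?P<size>\d+x\d+): "
    r"(?P<mean>[\d.]+) ± (?P<std>[\d.]+) ms"
)


def parse_log(text):
    """Map each test id (e.g. '1.1') to its mean run time in milliseconds."""
    return {m["test"]: float(m["mean"]) for m in LINE_RE.finditer(text)}


def speedups(cpu_log, gpu_log):
    """Return CPU time / GPU time per test id; values above 1 mean the GPU was faster."""
    cpu, gpu = parse_log(cpu_log), parse_log(gpu_log)
    return {test: cpu[test] / gpu[test] for test in cpu if test in gpu}


# Sample lines copied from the Xavier CPU and Xavier GPU logs above.
XAVIER_CPU = """
1.1 - inference | batch=50, size=224x224: 475 ± 20 ms
7.1 - inference | batch=20, size=224x224: 3362 ± 31 ms
17.2 - training | batch=10, size=64x64: 2114 ± 22 ms
"""
XAVIER_GPU = """
1.1 - inference | batch=50, size=224x224: 209 ± 24 ms
7.1 - inference | batch=20, size=224x224: 4282 ± 18 ms
17.2 - training | batch=10, size=64x64: 6810 ± 67 ms
"""

for test, ratio in speedups(XAVIER_CPU, XAVIER_GPU).items():
    print(f"{test}: CPU time / GPU time = {ratio:.2f}")
```

In these sample lines, MobileNet-V2 inference comes out faster on the GPU, while VGG-16 inference and Pixel-RNN training are slower on the GPU than on the CPU, which matches the raw numbers in the logs.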